Everything worked at first, and ChatGPT was a sweet companion. But I soon realised it kept giving me excessive affirmations I didn't need, as if it were pacifying me with a lazy, one-size-fits-all script. Something started to feel off: it was dragging me into an echo chamber of kind words that kept me away from the constructive criticism I needed to grow in the real world.

Using AI for mental health support has become commonplace, but if used carelessly it can lure us away from what really matters and lead to disastrous results, as the tragedy of Sewell Setzer shows. As a UX practitioner, I hear cheers or fears about AI taking over the industry every single day. But in sensitive areas such as healthcare and mental health, leaving everything in the hands of AI is clearly not the best solution. This reminds me of a key takeaway from this year's UX Healthcare London, shared by Diana Ayala, former product designer at the mental health app Wysa: AI should be a tool that helps drive human-to-human connection rather than the core experience itself. And since the end product is designed for humans, it's essential to embrace a human-centred approach throughout product development. Here's why.

Human-Centred UX Research Creates a Safe, Empathetic, and Nuanced Experience

So, why can't AI alone give us the experience we're looking for? It's because true empathy isn't about giving the same generic response to everyone. Without talking to real users, a product team just won't get the full picture: the cultural context, the preferred tone of voice, or a person's specific accessibility needs. When a product isn't tailored to different user groups, people won't feel understood or safe enough to really open up.

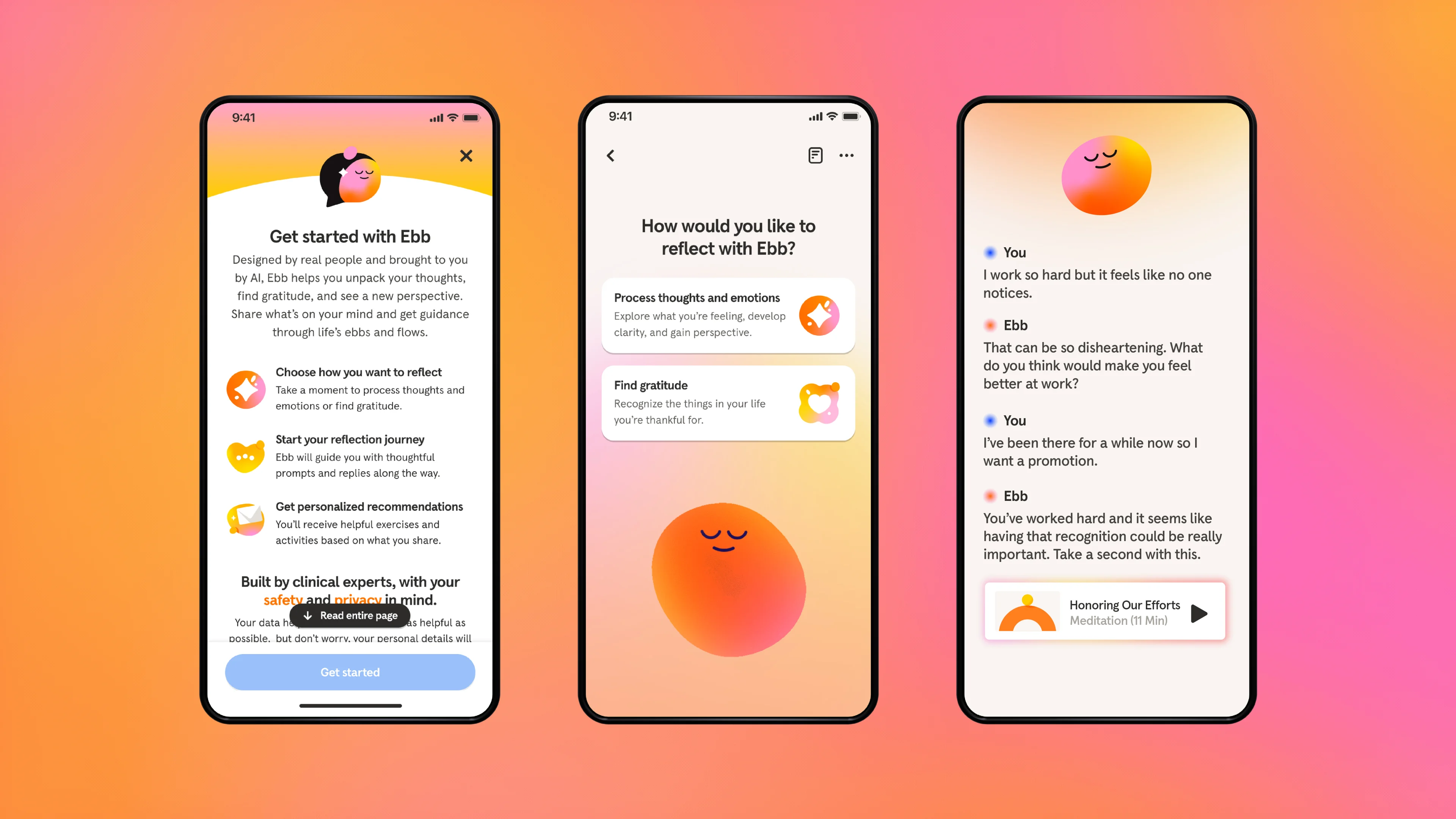

A great example of doing this right is Ebb by Headspace. The team didn't just build a chatbot; they worked with clinical psychologists and data scientists to build a conversational AI grounded in evidence-based methods. They put extensive research and testing into getting the tone just right, and it paid off. Headspace's own research found that users who engaged with Ebb had 34% higher app engagement, and 64% of them said they felt heard and understood. That's the difference a human-centred approach can make.

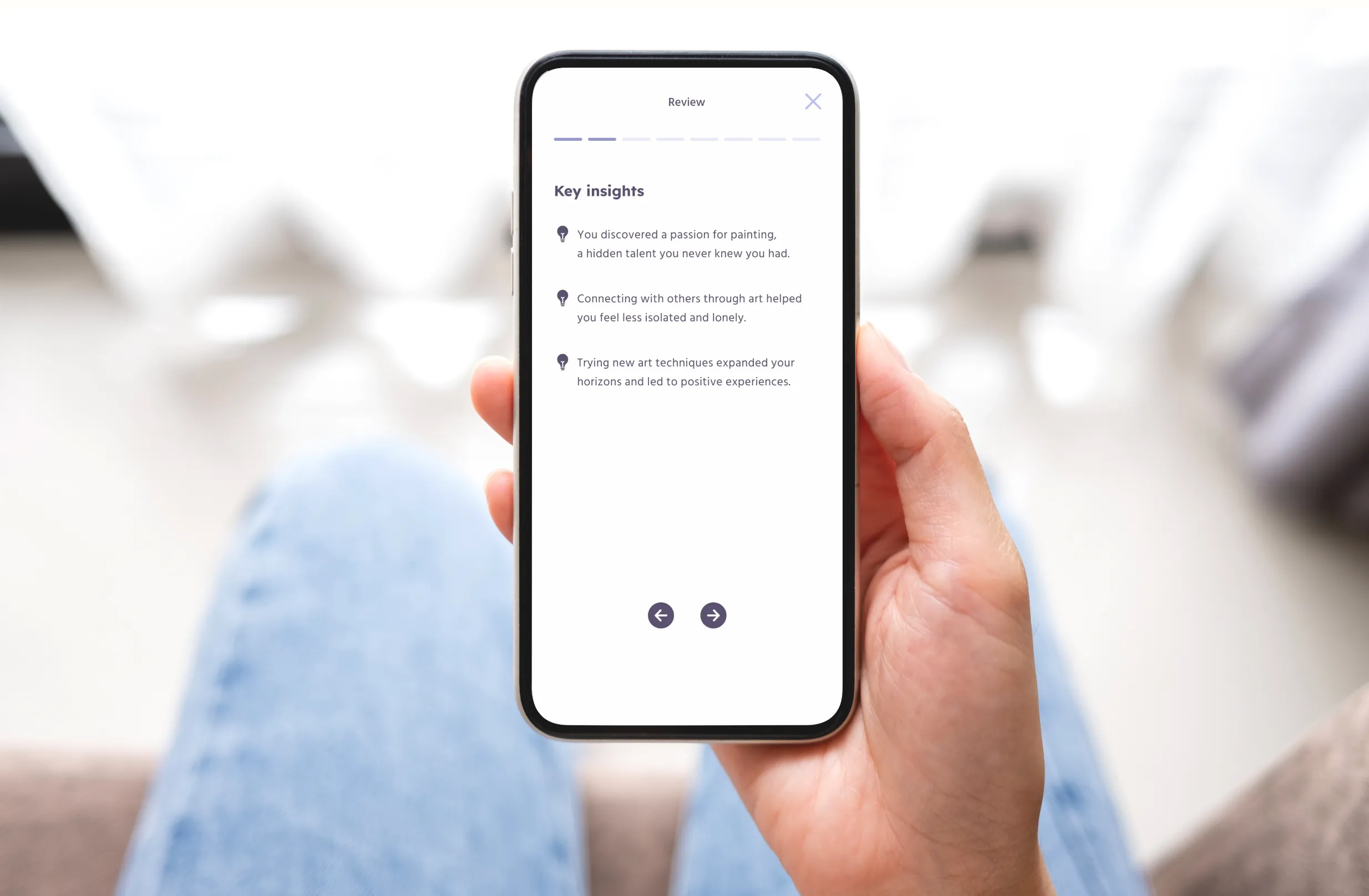

Another example is Mysoc, an app our team at Elixel created in collaboration with the University of Plymouth. It's a social prescription app that helps users self-reflect and track the impact of their social plans. (If you're not familiar with social prescribing, it's when a health professional connects a patient with non-clinical services, such as art classes or gardening clubs, to improve their wellbeing.) Talking to pilot users proved key. One participant told us the tone felt safe because it didn't shame her for "doing nothing", which allowed her to celebrate small wins even on an uneventful day. We also learned that members of the LGBTQ+ community often have specific needs and expectations around language. This kind of research helps product teams train the AI to deliver an experience that's genuinely relevant and makes a positive impact on someone's mental wellbeing.

Human-Centred Design Keeps the Focus on Real Human Connections

One of the biggest problems with relying too much on AI is that it only offers verbal comfort based on what you type. It isn't a human therapist who can guide you to build your own coping and problem-solving skills. As Diana Ayala pointed out, this can leave your real-life problems unresolved and keep you stuck in a loop of false reassurance.

With a human-centred design approach, we can make sure AI is used in a controlled way, with design patterns built in to stop users from becoming too dependent on it.

Let's look at the Mysoc app again. Instead of making the AI the star of the show, we put the focus on the user's social activities and self-reflection. We use AI only to help summarise insights and patterns in their social prescription journey. This keeps users focused on communicating with link workers and connecting with people in their community, building real-life relationships instead of becoming isolated by over-relying on a chatbot.

The Wysa app mentioned earlier is another great example of an AI-powered product with humans at its heart. Wysa uses AI as a smart tool to introduce users to suitable Cognitive Behavioural Therapy (CBT) tools through conversations that feel like talking to a human therapist. It helps users practise problem-solving instead of relying on verbal reassurance alone, which could actually worsen their situation in the long run. Wysa's effectiveness is backed by data, too: it has helped over 6 million people, with users reporting a 91% helpfulness rate, and a study in Singapore found that 91.6% of users who used it to reframe a negative thought did so successfully.

Human-Centred Product Teams Build Trust by Addressing Data Privacy

When it comes to something as personal and sensitive as mental health, we’re naturally concerned about data privacy. If we want users to trust a product, we need to show them that we get that.

This is where a multidisciplinary team comes in. Its members need to work together to find safe ways to use AI, such as choosing a secure, regulated AI model that minimises the risk of data leaks. They also need to make sure the privacy policy is genuinely easy to understand, not full of legal jargon that no one reads.

Ebb by Headspace is a good example of this. The team understands that you're sharing sensitive thoughts, so they made sure your conversations with Ebb are fully encrypted. They also have strict policies to ensure sensitive data is stored safely with minimal employee access. And this isn't just a promise; it's practice. Users can delete a conversation at any time if things get sensitive, or delete their entire account along with all associated data. There's even a specific process for revoking consent to share data, which shows a real commitment to putting users in control. That's exactly how you build trust: by putting users first.

So, what's the big takeaway from all this? It's not that we should fear or completely avoid AI. I think it's pretty obvious that AI can be a powerful tool and a real asset. But when we're designing products, especially for something as personal as mental health, the human should always be at the very centre. When we put people first, including their needs, their feelings and their trust, we can use these tools to make a real, lasting impact.

If you have thoughts on this or want to chat about creating a truly impactful product or experience for people, feel free to reach out to us!